# NFDI4Earth System Architecture Documentation

Here we document the NFDI4Earth software architecture. The documentation summarizes the major use cases, gives an overview of the requirement analysis approaches for the main NFDI4Earth software components, and outlines the envisioned quality goals and the architecture's potential stakeholders. More specifically, the documentation describes the externally and internally driven constraints that need to be considered when developing or contributing to NFDI4Earth software components. In this way, we help users better understand the solution strategy for the NFDI4Earth architecture. We use the arc42 template for the documentation of software and system architecture. The NFDI4Earth architecture is described with a blackbox-whitebox approach: for each component, we first describe its major aim, main functionalities and interfaces as seen by the users (blackbox), and then provide details on its inner solutions, e.g., sub-components and implementations (whitebox). The target group of the documentation includes software developers and architects as well as service providers.

The architecture documentation will be updated regularly. The first version of the NFDI4Earth architecture documentation is published on Zenodo: Degbelo, A., Frickenhaus, S., Grieb, J., Hachinger, S., Henzen, C., Klammer, R., Müller, C., Munke, J., Nüst, D., Purr, C., Weiland, C., & Wellmann, A. (2023). NFDI4Earth Software Architecture. Zenodo. https://doi.org/10.5281/zenodo.8333959 (Authors in alphabetical order)

Contact: Please send your questions and comments to Christin Henzen or the NFDI4Earth Architecture Team.

# Introduction and Goals

The mission of NFDI4Earth is to address the digital needs of the Earth System Sciences (ESS) for more FAIRness and Openness in ESS research. We develop several components, services and concepts within NFDI4Earth. These products help researchers, data experts, and software specialists to discover, access, and analyse relevant Earth data and related publications or tools.

NFDI4Earth supports the following (main) use cases with common services:

1. Discover and explore Earth data sources

2. Support data publication and data curation

3. Solve a research data management problem

4. Create and publish information products, e.g., as services

The architecture of the NFDI4Earth describes the different software components built to make resources from the Earth System Sciences (ESS) findable, accessible, interoperable and reusable, as well as the requirements for interfaces enabling their interaction.

## Requirements Overview

The following table provides an overview of NFDI4Earth software components, chosen approaches and concepts:

| Product | Summary | Approaches | Concept |
|---|---|---|---|
| Knowledge Hub (KH) | manages structured and interlinked metadata | Question-based approach | https://doi.org/10.5281/zenodo.7950859 |
| OneStop4All (OS4A) | acts as the primary visual and user-friendly NFDI4Earth access point | User-centred design and scenario-based approach | The related deliverable D4.3.1 is work in progress |
| Living Handbook (LHB) | stores and manages web-based, interactive articles about topics relevant to the community and the documentation of the NFDI4Earth products | Scenario-based approach | |
| User Support Network (USN) | is a distributed, cross-institutional helpdesk | Helpdesk concept based on long-standing practical experience | https://doi.org/10.5281/zenodo.7886568 |
| Education and Training Materials and Services (EduTrain) | stores and manages educational resources of the ESS community | Focus group, requirement analysis | https://doi.org/10.5281/zenodo.7940194 |
| Pilots | are small projects from various disciplines of the ESS community | Specific approaches based on the needs of the individual pilot projects | https://doi.org/10.5281/zenodo.7895674 |
| Incubators | are small, innovative blue-sky projects that scout for new trends and opportunities | Specific approaches based on the needs of the individual incubator projects | https://git.rwth-aachen.de/nfdi4earth/pilotsincubatorlab/incubator |

## Quality Goals

In this section, we describe quality goals, a term we use synonymously with architecture goals that have a long-term perspective. As the NFDI4Earth architecture is evolving, we plan to regularly re-evaluate the prioritization of the quality goals. Following ISO 25010 on software product quality, we consider the following quality goals for the NFDI4Earth software architecture:

| Priority | Goal | Description |
|---|---|---|
| 1 | Functional suitability | Degree to which the architecture provides functions that meet stated and implied needs when used under specified conditions |
| 2 | Maintainability | Degree of effectiveness and efficiency with which the architecture can be modified to improve it, correct it or adapt it to changes in environment, and in requirements |
| 3 | Usability | Degree to which a component can be used by specified users to achieve specified goals with effectiveness, efficiency and satisfaction in a specified context of use. |

### Further goals

Based on ISO 25010, the following goals are also important but are not ranked among the top three:

- Performance efficiency

- Compatibility

- Interoperability of provided interfaces

- Reliability

- Security

- Portability

- Innovations have units of adoption (see Rogers, 2003). These can be individuals (e.g., a researcher) or organizations (e.g., a research institute that commits to operating a service after its development). We anticipate that our architecture can serve as a blueprint for organizations (e.g., consortia) interested in realizing research data infrastructures.

## Stakeholders

We envision the following main roles for using the NFDI4Earth architecture documentation:

| Role/Name | Expectations |
|---|---|
| Internal developers of NFDI4Earth components | Find descriptions and specifications of other NFDI4Earth components and integrate the components within their own projects |
| External developers | Find specifications on how to use existing NFDI4Earth components and find descriptions on how to add components to the NFDI4Earth infrastructure |

Further roles and details may be defined by products/components in the building block view.

# Architecture Constraints

The following requirements constrain the design and implementation decisions and processes for the NFDI4Earth software architecture:

| Constraint | Type | Explanation |
|---|---|---|
| NFDI4Earth Proposal | organisational, strategic, technical | The proposal provides the context and aims of the NFDI4Earth software architecture (see https://doi.org/10.5281/zenodo.571894) |
| NFDI integration and interoperability | technical, strategic | The NFDI4Earth software architecture must fit with relevant activities of the overall NFDI, e.g., with base service initiatives |
| International integration and interoperability | technical, strategic | The NFDI4Earth software architecture will be embedded in international infrastructures |
| Developer expertise, research interests, and availability | organisational, technical | The expertise, research interests, and availability of a distributed software developer team affect the software project management as well as the technology decisions |
| Architecture team | organisational | Software decisions for NFDI4Earth software components are made by the NFDI4Earth Architecture Team on the suggestion of, and in agreement with, the measure leads of the relevant component |
| Developer team | organisational, conventions | NFDI4Earth cross-product implementations, guidance and conventions will be provided by the software developer team |
| FAIR principles | technical | NFDI4Earth software components should support the implementation of the FAIR principles |
| Free and Open Source Software | technical, strategic | NFDI4Earth components must be provided as Free and Open Source Software (FOSS). The open-source solutions used must be well established, documented and maintained, e.g., as evidenced by their long-term applicability, whenever possible. |
| Open standards / specifications | technical | Where interfaces are relevant for a component, NFDI4Earth software components will preferably reuse existing open standards or specifications for all interfaces, or otherwise define open ones |
| Loosely coupled components | technical | NFDI4Earth software components must be loosely coupled to allow replacing software solutions, e.g., as agile adaptations to user needs and changing requirements or to newly developed NFDI-wide solutions |
| Software repository | organisational | The source code of the NFDI4Earth software components must be managed in a software repository that allows contributions from (relevant) NFDI4Earth participants |
| Hosting at TU Dresden | technical, organisational | The NFDI4Earth software components will be hosted in the TU Dresden Enterprise Cloud and maintained following the respective guidelines / regulations (https://git.rwth-aachen.de/nfdi4earth/softwaretoolsarchitecture/devguide). |
| Containerization | technical | NFDI4Earth software components will run in containers whenever possible. |

Types: technical constraints, organisational constraints, strategic constraints, and conventions (e.g., programming or versioning guidelines, documentation or naming conventions).

# System Scope and Context

Today, there are numerous scattered and heterogeneous services supporting research data management (RDM) in ESS. Most of them are project-based and lack a long-term perspective. In addition, the implementation of RDM concepts, the FAIR principles and related concepts differs among researchers, institutions, and disciplines. NFDI4Earth will contribute to aligning existing and emerging RDM services with the FAIR and Openness principles and to working towards a unifying, long-term perspective for services. Thus, NFDI4Earth targets the consolidation and harmonization of research data-related services in ESS and the linking of these services into the NFDI4Earth software architecture (see https://doi.org/10.5281/zenodo.571894). Moreover, the NFDI4Earth architecture will provide information (metadata) on several resource types in human- and machine-readable form. The following list will be extended when needed:

- Repositories / Catalogues (for data and services)

- Services (with an interface)

- Datasets

- Software / Tools

- Code (snippets)

- Documents (e.g., reports, data management plans, …)

- Scientific publications

- Vocabularies

- Standards and Specifications

- Descriptions for networks / initiatives, organizations, projects

- Mixed types (e.g., EduTrain materials with slides, code, …)

We plan to harvest existing information via existing application programming interfaces (APIs). However, for some resource types, information still needs to be collected and maintained manually.
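Harvesting through existing APIs usually means paging through a provider's result set. The document does not name the protocols of the harvested sources, so the following Python sketch assumes, for illustration only, a catalogue that exposes OAI-PMH and follows `ListRecords` pages via their `resumptionToken`. The HTTP call is injected as a `fetch` function so that the paging logic can run offline; `list_records_url` and `harvest_identifiers` are hypothetical names, not NFDI4Earth code.

```python
import xml.etree.ElementTree as ET
from typing import Callable, Iterator, Optional
from urllib.parse import urlencode

# Namespace of OAI-PMH 2.0 response documents.
OAI_NS = {"oai": "http://www.openarchives.org/OAI/2.0/"}


def list_records_url(base_url: str, token: Optional[str] = None,
                     metadata_prefix: str = "oai_dc") -> str:
    """Build a ListRecords request URL for the first page or a continuation."""
    if token:
        # Continuation requests carry only the verb and the resumptionToken.
        params = {"verb": "ListRecords", "resumptionToken": token}
    else:
        params = {"verb": "ListRecords", "metadataPrefix": metadata_prefix}
    return f"{base_url}?{urlencode(params)}"


def harvest_identifiers(base_url: str, fetch: Callable[[str], str]) -> Iterator[str]:
    """Yield record identifiers from all result pages; `fetch` returns response XML."""
    token: Optional[str] = None
    while True:
        root = ET.fromstring(fetch(list_records_url(base_url, token)))
        for ident in root.iterfind(".//oai:record/oai:header/oai:identifier", OAI_NS):
            if ident.text:
                yield ident.text.strip()
        tok = root.find(".//oai:resumptionToken", OAI_NS)
        # A missing or empty resumptionToken marks the last page.
        if tok is None or not (tok.text or "").strip():
            break
        token = tok.text.strip()
```

In practice, `fetch` could wrap `urllib.request.urlopen`; OAI-PMH error responses, retries and rate limiting are deliberately left out of this sketch.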

The list of integrated (meta)data sources will be continuously adapted with respect to the following main goals:

- add further sources to provide additional high-quality (meta) data or to reduce the amount of manually collected (meta) data,

- link metadata records and minimize duplication of information with respect to the quality of metadata,

- remove a source when it is harvested by another source that is integrated in the NFDI4Earth software architecture or when the source is no longer maintained.
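One way to read the goal of linking records and minimizing duplication is to merge harvested records that share a persistent identifier. The Python sketch below assumes that each record is a dict with a `source` field and an optional `doi` field (hypothetical field names, not the NFDI4Earth schema). Records with the same normalized DOI are merged into one entry that keeps every source as provenance; records without a DOI are kept unchanged.

```python
def normalize_doi(doi: str) -> str:
    """Lower-case a DOI and strip common resolver prefixes."""
    doi = doi.strip().lower()
    for prefix in ("https://doi.org/", "http://doi.org/", "doi:"):
        if doi.startswith(prefix):
            return doi[len(prefix):]
    return doi


def link_records(records: list) -> list:
    """Merge records that share a DOI; keep all sources as provenance."""
    merged: dict = {}    # normalized DOI -> merged record (insertion-ordered)
    without_doi = []     # records that cannot be linked by DOI are kept as-is
    for rec in records:
        if not rec.get("doi"):
            without_doi.append(dict(rec, sources=[rec["source"]]))
            continue
        key = normalize_doi(rec["doi"])
        if key not in merged:
            merged[key] = dict(rec, doi=key, sources=[rec["source"]])
            continue
        entry = merged[key]
        entry["sources"].append(rec["source"])
        # Fill empty fields from later sources without overwriting existing values.
        for field, value in rec.items():
            if field not in ("source", "doi") and not entry.get(field):
                entry[field] = value
    return list(merged.values()) + without_doi
```

Keeping the first source's values and only filling gaps is one simple policy; a real pipeline might instead prefer the source with the best-curated metadata.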

The development of the NFDI4Earth architecture is supported and influenced by several external drivers. For instance, the architecture will reuse or integrate well-known and accepted existing components and resources from the ESS community and infrastructure providers. Moreover, the NFDI4Earth architecture will benefit from and contribute to the activities of the National Research Data Infrastructure (NFDI) by 1) consuming and integrating NFDI base services and 2) developing strategies for reusing and integrating software components across NFDI consortia.

The following aspects are beyond the scope of the NFDI4Earth architecture:

- Guarantee for availability and functional correctness of integrated services: NFDI4Earth cannot ensure availability and functional correctness of integrated services. However, existing information on both aspects will be made available for the users.

- Correctness of harvested or linked (meta) data: Harvested or linked (meta) data are provided as-is, with a reference to the data source and information on curation / quality assurance when available.

- Completeness of harvested or linked (meta) data: NFDI4Earth integrates existing services with no claim to completeness.

- Up-to-dateness of information: NFDI4Earth will systematically evaluate selected information during the project period. Due to the amount of harvested and provided information, NFDI4Earth will apply different strategies for the different resource types and data sources, e.g., a community approach to update, or to flag the need to update, information that is provided exclusively by NFDI4Earth (i.e., collected manually within NFDI4Earth).

- Compliance with specifications and standards: NFDI4Earth will not check external sources for compliance, but will provide the existing information from the providers.

- Hosting and maintenance of all existing (external) project-based ESS services after funding: NFDI4Earth will not host and maintain all existing (external) ESS services after the end of the services’ project periods, but will first evaluate a proposed service against relevant functional and non-functional requirements.

# Solution Strategy

The NFDI4Earth software architecture features four layers: a resource layer (data sources), a data management layer, a semantically-enriched metadata layer, and an application layer. An important feature of the resource layer is that all metadata about the research products are stored in such a way that they are accessible through an Application Programming Interface (API). Living Handbook articles are stored in the NFDI4Earth GitLab and EduTrain materials are stored in a learning management system (LMS); both will be harvested through their APIs. Documents (e.g., scientific publications, deliverables) can be stored in Zenodo and will then be harvested through its API. External data sources (e.g., catalogues/registers) with an API will be integrated into the NFDI4Earth architecture. The Knowledge Hub is the only component of the semantically-enriched metadata layer. It stores all metadata in RDF (Resource Description Framework) and is accessible through a SPARQL API. The OneStop4All communicates with the Knowledge Hub through that SPARQL API. In NFDI4Earth, the semantically-enriched metadata layer and the application layer are implemented with open-source software.

## Resource layer

An important feature of the resource layer is that all metadata about the research products are stored in such a way that they are accessible through an API. Documents (e.g., scientific publications, deliverables) can be stored in Zenodo and will then be harvested through its API. External data sources (e.g., catalogues/registers) with an API will be integrated into the NFDI4Earth architecture.
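To illustrate harvesting documents from Zenodo, the Python sketch below pages through the public Zenodo records search (`https://zenodo.org/api/records`) and follows the `links.next` URL of each result page. The community identifier `nfdi4earth` is an assumption, and the response fields used (`hits.hits`, `doi`, `metadata.title`, `metadata.publication_date`) reflect our understanding of Zenodo's REST API; verify them against the current Zenodo documentation. The `fetch` argument is a hypothetical injected helper that returns the response body, which keeps the example testable offline.

```python
import json
from typing import Callable, Iterator, Optional
from urllib.parse import urlencode

ZENODO_RECORDS = "https://zenodo.org/api/records"


def first_page_url(community: str, size: int = 100) -> str:
    """Search URL for the records of one Zenodo community (identifier is an assumption)."""
    query = urlencode({"communities": community, "size": size, "page": 1})
    return f"{ZENODO_RECORDS}?{query}"


def harvest_zenodo(url: str, fetch: Callable[[str], str]) -> Iterator[dict]:
    """Follow `links.next` across result pages and yield minimal metadata per record."""
    next_url: Optional[str] = url
    while next_url:
        page = json.loads(fetch(next_url))
        for hit in page.get("hits", {}).get("hits", []):
            meta = hit.get("metadata", {})
            yield {
                "doi": hit.get("doi"),
                "title": meta.get("title"),
                "published": meta.get("publication_date"),
            }
        # The last page has no "next" link, which ends the loop.
        next_url = page.get("links", {}).get("next")
```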

## Data management layer

Through the use of a data management layer that stores all manually-created and harvested metadata in Cordra, the architecture supports the management and provision of FAIR digital objects.

## Semantically-enriched metadata layer

The Knowledge Hub is the only component of the semantically-enriched metadata layer. It stores all metadata in RDF (Resource Description Framework) and is accessible through a SPARQL API.
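For consumers with basic programming skills, a query against the Knowledge Hub could look like the following Python sketch. It uses only the standard library and the SPARQL 1.1 Protocol (the query is sent as a `query` parameter in a GET request, and results are requested as `application/sparql-results+json`). The endpoint URL is a placeholder, and the use of `dcterms:title` is an assumption about the Knowledge Hub's vocabulary, not a description of its schema.

```python
import json
import urllib.request
from urllib.parse import urlencode

# Placeholder endpoint; replace with the Knowledge Hub's published SPARQL URL.
ENDPOINT = "https://sparql.example.org/query"

QUERY = """PREFIX dcterms: <http://purl.org/dc/terms/>
SELECT ?resource ?title WHERE { ?resource dcterms:title ?title } LIMIT 5"""


def build_request(endpoint: str, query: str) -> urllib.request.Request:
    """SPARQL 1.1 Protocol query via GET, asking for JSON results."""
    url = f"{endpoint}?{urlencode({'query': query})}"
    return urllib.request.Request(
        url, headers={"Accept": "application/sparql-results+json"})


def rows(results_json: str) -> list:
    """Flatten SPARQL JSON results into {variable: value} dicts (unbound variables omitted)."""
    data = json.loads(results_json)
    variables = data["head"]["vars"]
    return [{v: b[v]["value"] for v in variables if v in b}
            for b in data["results"]["bindings"]]


# Usage (performs a network call):
# with urllib.request.urlopen(build_request(ENDPOINT, QUERY)) as resp:
#     for row in rows(resp.read().decode("utf-8")):
#         print(row["resource"], row["title"])
```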

## Product layer

The OneStop4All provides a single entry point to find and explore ESS resources. The communication between the OneStop4All and the Knowledge Hub happens through the Knowledge Hub's SPARQL API.
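The document does not describe how the OneStop4All turns a search term into a SPARQL query. One possible approach, sketched below in Python under the assumption that resources carry a `dcterms:title`, is a case-insensitive `CONTAINS` filter. The detail that matters most is escaping user input before embedding it in a SPARQL string literal; for large data volumes, a dedicated full-text index may be preferable, which is beyond this sketch.

```python
def sparql_string(value: str) -> str:
    """Quote user input as a SPARQL string literal, escaping special characters."""
    escaped = (value.replace("\\", "\\\\").replace('"', '\\"')
                    .replace("\n", "\\n").replace("\r", "\\r"))
    return f'"{escaped}"'


def keyword_query(keyword: str, limit: int = 20) -> str:
    """Case-insensitive title search; dcterms:title is an assumed property."""
    return (
        "PREFIX dcterms: <http://purl.org/dc/terms/>\n"
        "SELECT ?resource ?title WHERE {\n"
        "  ?resource dcterms:title ?title .\n"
        f"  FILTER(CONTAINS(LCASE(STR(?title)), LCASE({sparql_string(keyword)})))\n"
        f"}} LIMIT {int(limit)}"
    )
```

Casting `limit` with `int()` keeps non-numeric input out of the query text as well.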

The Living Handbook provides an editorial workflow to generate high-quality content for users and ensures that authors receive credit, which helps to attract authors. Living Handbook articles are stored in GitHub/GitLab and will be harvested through their APIs.
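Harvesting Living Handbook articles from a GitLab repository can use the GitLab REST API (v4): the repository tree endpoint lists files, and the raw file endpoint returns a file's content. The Python sketch below only builds request URLs and filters Markdown files (the Living Handbook uses MkDocs, which renders Markdown). The API base URL follows the NFDI4Earth GitLab host referenced in this document, while the project path `nfdi4earth/example-handbook` and the branch `main` are hypothetical.

```python
from urllib.parse import quote, urlencode

GITLAB_API = "https://git.rwth-aachen.de/api/v4"
PROJECT = "nfdi4earth/example-handbook"  # hypothetical project path


def project_id(path: str) -> str:
    """GitLab accepts a URL-encoded "namespace/project" path instead of a numeric id."""
    return quote(path, safe="")


def tree_url(project: str, ref: str = "main", page: int = 1) -> str:
    """List repository files recursively; results are paginated via `page`."""
    params = urlencode({"ref": ref, "recursive": "true", "per_page": 100, "page": page})
    return f"{GITLAB_API}/projects/{project_id(project)}/repository/tree?{params}"


def raw_file_url(project: str, file_path: str, ref: str = "main") -> str:
    """Raw file content; the file path must be URL-encoded, including "/"."""
    encoded = quote(file_path, safe="")
    return (f"{GITLAB_API}/projects/{project_id(project)}"
            f"/repository/files/{encoded}/raw?{urlencode({'ref': ref})}")


def markdown_articles(tree: list) -> list:
    """Keep paths of Markdown files (type "blob"), skipping directories ("tree")."""
    return [e["path"] for e in tree
            if e.get("type") == "blob" and e["path"].endswith(".md")]
```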

The EduTrain provides access to education and training materials and services. EduTrain materials are stored in GitHub/GitLab and will be harvested through their APIs.

The User Support Network is a distributed helpdesk based on a ticket system (OTRS) provided by TU Dresden.

The Pilots are small projects that provide solutions for specific needs of the ESS community. They will be made discoverable through the OneStop4All and integrated in the NFDI4Earth architecture whenever possible (see Requirements Overview).

The Incubator projects are small blue-sky projects on innovative ESS RDM approaches with an experimental character. They will be made discoverable through the OneStop4All and their source code and descriptions will be published for reuse by the ESS community.

# Building Block View

## Whitebox Overall System

The NFDI4Earth software architecture provides two services that serve as entry points to several linked NFDI4Earth and community-developed services: the OneStop4All as the human-readable, interactive user interface and the Knowledge Hub as the machine-readable interface.

### Knowledge Hub

The blackbox description is based on the Knowledge Hub concept one-pager and concept deliverable (https://doi.org/10.5281/zenodo.7950859; https://doi.org/10.5281/zenodo.7583596).

The NFDI4Earth Knowledge Hub serves as one major backend service of NFDI4Earth. It integrates metadata about all NFDI4Earth resources and is accessed via an API.

| Problem | Research products from the Earth System Sciences (ESS) are increasingly difficult to find. There is a need for tools that automate their discovery. 'Research products' is used here as a catch-all term that includes 1) datasets, 2) services, 3) tools, 4) vocabularies, 5) reports, 6) scientific papers, and 7) peer reviews. |
|---|---|
| Innovations | 1) Structured and interlinked metadata for ESS resources produced in NFDI4Earth or relevant for NFDI4Earth. These ESS resources can be any research product listed above, an article of the Living Handbook, or educational material from EduTrain. We use RDF (Resource Description Framework) as the encoding format. 2) Structured and interlinked metadata for ESS resources hosted by NFDI4Earth partners. 3) An NFDI4Earth label, compiled from the available metadata, as an indicator of the extent to which services are FAIR, and in particular of the degree of interoperability of the services. |
| Users | Consumers: people who have basic skills in programmatic data access (i.e., they are able to write a short snippet of code in a programming language to retrieve data). Producers: people who create/edit metadata for the Knowledge Hub. They may have programming skills (in which case they create/edit metadata via the API of the Knowledge Hub) or no programming skills (in which case they create/edit metadata via a user interface). |
| Interface(s) | SPARQL API |
| Unit of adoption | Individuals (e.g., data scientists); organizations (indirectly, as a by-product of adoption by individuals) |
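The document does not specify how the NFDI4Earth label is compiled from the available metadata. Purely as an illustration, the Python sketch below derives per-principle scores (the share of passed checks for F, A, I and R) from a service's metadata record. The field names and checks in `service_checks` are hypothetical placeholders, not the actual label criteria.

```python
from dataclasses import dataclass


@dataclass
class Check:
    name: str
    principle: str  # one of "F", "A", "I", "R"
    passed: bool


def service_checks(meta: dict) -> list:
    """Hypothetical metadata checks for a service record (not the real label criteria)."""
    return [
        Check("persistent identifier", "F", bool(meta.get("identifier"))),
        Check("description", "F", bool(meta.get("description"))),
        Check("endpoint URL", "A", bool(meta.get("endpoint"))),
        Check("declared interface standard", "I", bool(meta.get("conforms_to"))),
        Check("controlled keyword vocabulary", "I", bool(meta.get("keyword_vocabulary"))),
        Check("license", "R", bool(meta.get("license"))),
    ]


def label_scores(checks: list) -> dict:
    """Share of passed checks per FAIR principle, from 0.0 to 1.0."""
    scores = {}
    for principle in "FAIR":
        relevant = [c for c in checks if c.principle == principle]
        if relevant:
            scores[principle] = sum(c.passed for c in relevant) / len(relevant)
    return scores
```

Reporting the interoperability score separately, rather than folding everything into one number, would match the concept's emphasis on the degree of interoperability.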

### OneStop4All

The blackbox description is based on the OneStop4All concept one-pager https://doi.org/10.5281/zenodo.7583596.

The NFDI4Earth OneStop4All is the primary visual and user-friendly NFDI4Earth access point.

| Challenges | Research products from the ESS community are diverse and increasingly difficult to find. There is thus a need for platforms that efficiently organize access to ESS resources, in particular quality-assured resources. These platforms should be 1) user-friendly and easy to use, taking specific user characteristics and needs into account, and 2) flexible enough to integrate future RDM services (e.g., address multidisciplinary use cases with other NFDIs, link to EOSC services). |
|---|---|
| Innovations | 1) Central search on NFDI4Earth resources and distributed sources, including relevant governmental, research and other open data sources; 2) innovative user interfaces to explore the linked ESS resources that adapt to the needs of different user groups; 3) intelligent functionality to connect Living Handbook information for registered resources; 4) seamless transition from machine-based to human-based support; 5) a community tool fostering the sharing of high-quality information and resources |
| Users | We envision the following types of primary users: 1) users who are looking for ESS research and ESS RDM information, e.g., events, networks; 2) users who are looking for support, e.g., on NFDI4Earth tools or on how to use NFDI4Earth services; 3) users who want to offer information/research products; 4) users who want to provide feedback on the content |
| Interface(s) | User interface |
| Unit of adoption | Individuals |

## Whitebox NFDI4Earth Products

### White Box Knowledge Hub

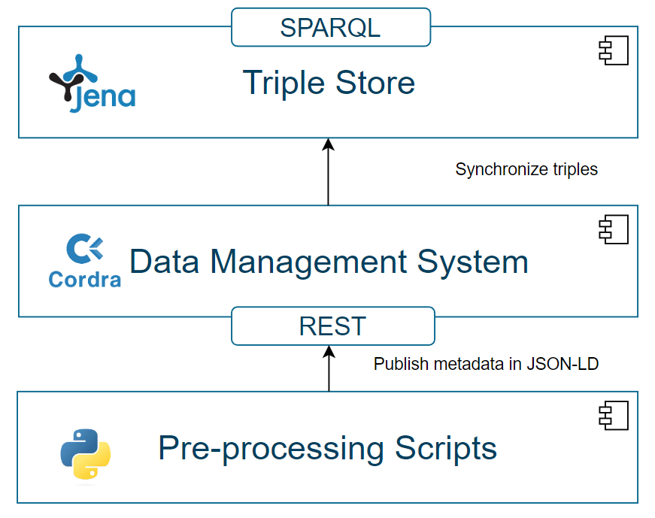

The NFDI4Earth Knowledge Hub consists of three building blocks to harvest, process and provide metadata: pre-processing scripts, a data management system, and a triple store. The pre-processing scripts mainly provide pipelines that harvest data sources or populate manually collected metadata, map the metadata to the NFDI4Earth schemas, and add or update the harmonised metadata in the data management system. These scripts are written in Python and provided as open source in the NFDI4Earth GitLab. Through the use of a data management system that stores all manually-created and harvested metadata, the NFDI4Earth software architecture supports the management and provision of FAIR digital objects. The triple store stores all metadata as semantically-enriched metadata in RDF (Resource Description Framework) and is accessible through a SPARQL API. In NFDI4Earth, both the data management system and the triple store are implemented with open-source software.
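The harvest, map and add/update steps of the pre-processing pipelines can be sketched as follows. This is a minimal Python illustration, not the NFDI4Earth scripts: `FIELD_MAP` is a hypothetical stand-in for a mapping to the NFDI4Earth schemas, and `InMemoryStore` replaces the data management system so that the example runs offline.

```python
from typing import Callable, Iterable

# Hypothetical mapping from one source's field names to schema fields.
FIELD_MAP = {"name": "title", "abstract": "description", "url": "landing_page"}


def map_record(raw: dict, source: str) -> dict:
    """Rename known fields and record provenance; unmapped fields are dropped."""
    mapped = {target: raw[src] for src, target in FIELD_MAP.items() if src in raw}
    mapped["source"] = source
    mapped["source_id"] = str(raw["id"])
    return mapped


class InMemoryStore:
    """Offline stand-in for the data management system."""

    def __init__(self) -> None:
        self.objects: dict = {}

    def upsert(self, key: str, obj: dict) -> str:
        """Create or update an object; report 'created', 'updated' or 'unchanged'."""
        old = self.objects.get(key)
        self.objects[key] = obj
        if old is None:
            return "created"
        return "unchanged" if old == obj else "updated"


def run_pipeline(source: str, harvest: Callable[[], Iterable[dict]],
                 store: InMemoryStore) -> dict:
    """Harvest raw records, map them, and add/update them in the store."""
    counts = {"created": 0, "updated": 0, "unchanged": 0}
    for raw in harvest():
        obj = map_record(raw, source)
        # Key by source and source-local id so that re-harvests update in place.
        counts[store.upsert(f"{source}:{obj['source_id']}", obj)] += 1
    return counts
```

Keying each object by source and source-local identifier makes repeated harvests update existing objects instead of creating duplicates.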

### White Box OneStop4All

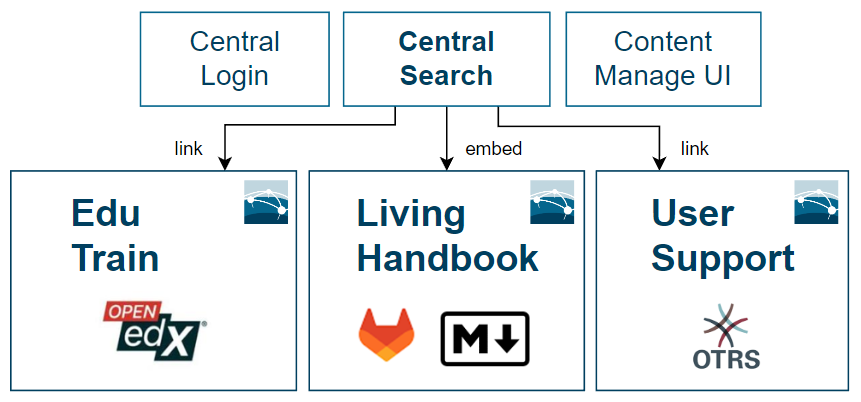

The OneStop4All provides the Web frame for all NFDI4Earth user interface (UI) components. It links or embeds the EduTrain learning management system and the Living Handbook user interfaces, providing user-friendly navigation and a common look and feel for all NFDI4Earth UI components, and it provides access to the User Support Network via a Web form. The OneStop4All is thus not the exclusive access point, but an additional one, for all other NFDI4Earth software components with user interfaces. The central search on all NFDI4Earth resources is the core functionality of the OneStop4All. The OneStop4All is implemented as a custom solution.

### EduTrain

The blackbox description is based on the EduTrain concept one-pager https://doi.org/10.5281/zenodo.7583596.

The NFDI4Earth EduTrain will provide a comprehensive overview of existing education and training materials and will provide FAIR, open, ready-to-use modular course materials that are developed by the EduTrain team based on the community's needs.

| Problem | A lack of FAIR and open educational resources is one of the biggest obstacles to scientific activities. Although substantial effort has already been put into developing Open Educational Resources (OERs), many issues remain, e.g., peer review of the content, maintenance responsibility, quality control, management, and lack of funding for development and maintenance. Another major problem is that most existing materials on the FAIR principles and Open Science are generic. At the same time, ESS-specific materials that outline how to adapt the FAIR principles and Open Science concepts are highly needed but largely missing. |
|---|---|
| Innovations | 1) Development and maintenance of OERs and curricula based on regular educational needs assessments of the ESS community; 2) continuous collection and evaluation of existing OERs on research data management tailored to ESS, spatio-temporal data literacy, and spatio-temporal data science; 3) funding the development of new openly licensed materials to meet the educational needs of the ESS community by publishing calls for educational pilots; 4) development of target group-specific curricula |
| Users | 1) Scientists, ranging from early-career researchers (Ph.D. students, postdocs) to experienced senior scientists and professors; 2) Master's students; 3) Bachelor's students; 4) educators and training professionals (e.g., professors, lecturers, teaching assistants) |
| Interface(s) | User interfaces |
| Unit of adoption | Individuals; higher education institutions; research centres |

### Living Handbook

The blackbox description is based on the Living Handbook concept one-pager https://doi.org/10.5281/zenodo.7583596.

The NFDI4Earth Living Handbook provides an interactive Web-based documentation for all aspects related to the NFDI4Earth, its services and outcomes.

| Problem | Many researchers, societies, funding agencies, companies, authorities, and members of the interested public are not familiar with every aspect of NFDI4Earth, its services, or ESS research data in general. A core service with overview documents on such topics is, hence, required. The various user needs and levels of prior knowledge must be reflected in these documents, i.e., they must provide flexible granularity, from brief and informal to comprehensive and detailed. |
|---|---|
| Innovations | 1) Structuring and harmonizing all aspects of NFDI4Earth as well as ESS-related information from different sources, including previously unpublished ones; 2) curating and presenting information about NFDI4Earth as a collection of edited, interlinked, human-readable documents of various types (documentation, report, article, manual, tutorial, op-ed, etc.) that are externally linked with general ESS resources; 3) compiling documents tailored to the different proficiency levels and backgrounds of readers by automatically re-combining and re-arranging the documents' elements |
| Users | Consumers: users with an interest in NFDI4Earth, NFDI, or other ESS-related information, data, services, concepts, software, etc. We expect users with a wide variety of backgrounds and prior knowledge. Editors/authors: persons who provide the LHB contents and regularly check their quality. |
| Interface(s) | User interface |
| Unit of adoption | The LHB will be beneficial to, e.g., 1) researchers, as a manual on how to use NFDI4Earth and related external products and to learn about the scope of NFDI4Earth and its services; 2) scientific and professional societies, as a resource on ESS data-related topics to which they can refer their members; 3) funding agencies, to understand how researchers use and provide ESS data; 4) authorities, to obtain and provide information about ESS data; 5) the interested public, as the first stop to find ESS-related information |

### User Support Network

The blackbox description is based on the User Support Network concept one-pager https://doi.org/10.5281/zenodo.7583596.

The NFDI4Earth User Support Network will provide distributed, cross-institutional user support based on the existing services of the partner institutions and on the upcoming NFDI4Earth innovations.

| Challenges | Research data services from the Earth system sciences community are diverse and until now mainly directed to smaller communities, e.g., an institute. We will work on a structure in the USN that allows us to map the different resources and to access them. The USN will also evaluate whether an open community support system (like Stack Overflow) would be of value in addition to the institutional RDM support of the USN team. For this evaluation, we need a solid idea of what kinds of questions users are asking, which we expect to obtain by running the ticketing system. |
|---|---|
| Innovations | 1) Single point of access to a national expert pool offering individual support for ESS RDM problems in all phases of the data lifecycle; 2) collection, harmonization and provision of expert knowledge based on institutional experience, e.g., via the Living Handbook; 3) creation of standard operating procedures (SOPs) for user support |
| Users | We envision the following types of primary users: 1) users who are looking for general information, e.g., on NFDI4Earth tools or on how to use NFDI4Earth services; 2) users who are looking for support in ESS research data management (RDM) |
| Interface(s) | User interface |
| Unit of adoption | Individuals; research institutions |

### Pilots

The blackbox description is based on the Pilots concept one-pager https://doi.org/10.5281/zenodo.7583596.

The NFDI4Earth Earth System Science (ESS) Pilots are small projects from various disciplines of the ESS community usually lasting for one year. Pilots are used to assess and define requirements in other task areas and promising results will be integrated into the NFDI4Earth infrastructure.

| Problem | To achieve acceptance and adoption by the community as well as a cultural change, NFDI4Earth must not implement top-down solutions but involve ideas and existing tools from the research community. Different domains of ESS face different challenges in the interoperability and standardization of data, methods and workflows. Expertise and technologies exist but need further development to meet domain-specific requirements and often lack transferability for use beyond a small user group. |
|---|---|
| Innovations | 1) Agile projects that directly reflect researchers' needs in data management and implement novel solutions for research data management; 2) bottom-up innovation scouts for other Task Areas of NFDI4Earth; 3) focus on the transferability of results and the enhancement of technologies to make use of existing resources and foster community-driven design of NFDI4Earth |
| Users | The target community is researchers from the ESS community working on tools that enhance research data management. The solutions implemented in the pilots are targeted to the respective scientific community. |
| Interface(s) | Depending on the individual pilot proposals |
| Unit of adoption | 1) NFDI4Earth, which takes up the pilots' results into its infrastructure; 2) user communities of different domains that adopt the tools newly developed by the pilots |

# Runtime View

This section will be updated at a later stage.

# Deployment View

This section will be updated at a later stage. Please contact Ralf Klammer (ralf.klammer@tu-dresden.de) for more details.

# Cross-cutting Concepts

This section will be updated at a later stage and will include:

- Developer's guide

- Certificate strategy

- CI/CD implementation

- Identity management

# Architecture Decisions

## Decision process

As described in the NFDI4Earth proposal, Measure 4.3 (Central Support Services for the federated NFDI4Earth Community) is responsible for the Support and Coordination of Software Developments (Action 5). One major goal of this action is to ensure coherent software development within and for NFDI4Earth. Software decisions concern the use and purpose of software solutions (what and how) that are part of the emerging NFDI4Earth software components and whose use affects the NFDI4Earth community directly or prospectively.

The software decisions are made by the NFDI4Earth Architecture Team on the proposal of, and in consultation with, the measure leads responsible in each case. This ensures that the chosen tools and systems fit into the overall software ecosystem and the NFDI4Earth software architecture concept. The software solutions selected for NFDI4Earth software products should meet the essential functional and non-functional requirements of the respective software component or be the best available basis for further development. Moreover, they should meet software constraints 2 and 7-11 (see Section Architecture Constraints: NFDI integration and interoperability, FAIR principles, Free and Open Source Software, open standards / specifications, loosely coupled components, and software repository). Software solutions chosen for an NFDI4Earth software component can be changed at any time, again in consultation with the NFDI4Earth Architecture Team, if there are appropriate reasons for doing so (e.g., better solutions on the market or changes in requirements).

The request for a software decision is usually made to the NFDI4Earth Architecture Team by a measure lead, and the NFDI4Earth Architecture Team decides within 14 days. In the case of unresolvable conflicts or diverging ideas on software decisions between the measure leads and the NFDI4Earth Architecture Team, the NFDI4Earth Architecture Team prepares a decision proposal for the steering group, which then acts as the decision-making authority.

Software decisions are documented by the initiating measure leads, including the time of the decision, the related NFDI4Earth software products, and a short explanation of the decision made with respect to the above-mentioned selection criteria. This documentation is edited by the NFDI4Earth Architecture Team and published in the form of a management version in the software architecture project of the NFDI4Earth GitLab.

The NFDI4Earth Architecture Team consists of Auriol Degbelo, Christin Henzen, Carsten Keßler, Ralf Klammer, Daniel Nüst, Claus Weiland and can be contacted via nfdi4earth-architecture@tu-dresden.de

## Decisions

- Fuseki as the triple store for the Knowledge Hub[^1]

- CORDRA as middleware for metadata and data management for the Knowledge Hub

- MkDocs as a static site generator for the Living Handbook

- OTRS as the ticketing system for the User Support Network

- Open edX as the EduTrain learning management system

- A custom React solution is implemented for the OneStop4All

[^1] We expect this decision to change as Fuseki might not be able to scale as the number of managed triples grows rapidly

# Risks and Technical Debts

Risks and technical debts will be added here as they arise. The systematic detection and evaluation of risks and technical debts in the architecture will support management stakeholders in the overall risk analysis and planning.

| Name | Priority (high, medium, low) | Measures to minimize, mitigate, avoid, or reduce |
|---|---|---|
| ... | ... | ... |

Priorities: high risks or debts are critical and can break the whole project, medium risks or debts can cause serious extra efforts but can be fixed even if one must go outside of the given resources, and low risks or debts are uncertainties that can be handled within the normal project resources.

# Acknowledgements

This specification and guides are developed by the members of the project NFDI4Earth - https://nfdi4earth.de/. This work has been funded by the German Research Foundation (DFG) through the project NFDI4Earth (TA4 M4.3, DFG project no. 460036893) within the German National Research Data Infrastructure (NFDI, https://www.nfdi.de/).

To cite this specification please use the first published version of the NFDI4Earth architecture documentation: Degbelo, A., Frickenhaus, S., Grieb, J., Hachinger, S., Henzen, C., Klammer, R., Müller, C., Munke, J., Nüst, D., Purr, C., Weiland, C., & Wellmann, A. (2023). NFDI4Earth Software Architecture. Zenodo. https://doi.org/10.5281/zenodo.8333959 (Authors in alphabetical order)

For a complete list of publications, posters, presentations, and software projects by NFDI4Earth, please visit https://nfdi4earth.de/outputs.

# About arc42

arc42, the Template for documentation of software and system architecture.

Template Version 8.2 EN. (based upon AsciiDoc version), January 2023

Created, maintained and © by Dr. Peter Hruschka, Dr. Gernot Starke and contributors. See https://arc42.org.

# License

The NFDI4Earth architecture specification is licensed under Creative Commons CC0 1.0 Universal License, see file LICENSE.

To the extent possible under law, the people who associated CC0 with this work have waived all copyright and related or neighboring rights to this work.

This work is published from: Germany.